I need to crawl the children's audio resources on cbeebies.com.

Scrapy

Chinese tutorial:

English tutorial:

Scrapy Tutorial — Scrapy 1.4.0 documentation

Official site:

Scrapy | A Fast and Powerful Scraping and Web Crawling Framework

First, install and configure Scrapy on the Mac:

```
➜ scrapy pip install scrapy
Collecting scrapy
  Downloading Scrapy-1.4.0-py2.py3-none-any.whl (248kB)
    100% |████████████████████████████████| 256kB 96kB/s
Collecting parsel>=1.1 (from scrapy)
  Downloading parsel-1.2.0-py2.py3-none-any.whl
Collecting service-identity (from scrapy)
  Downloading service_identity-17.0.0-py2.py3-none-any.whl
Collecting lxml (from scrapy)
  Downloading lxml-4.1.1-cp27-cp27m-macosx_10_6_intel.macosx_10_9_intel.macosx_10_9_x86_64.macosx_10_10_intel.macosx_10_10_x86_64.whl (8.7MB)
    100% |████████████████████████████████| 8.7MB 41kB/s
Collecting cssselect>=0.9 (from scrapy)
  Downloading cssselect-1.0.1-py2.py3-none-any.whl
Collecting w3lib>=1.17.0 (from scrapy)
  Downloading w3lib-1.18.0-py2.py3-none-any.whl
Collecting queuelib (from scrapy)
  Downloading queuelib-1.4.2-py2.py3-none-any.whl
Collecting PyDispatcher>=2.0.5 (from scrapy)
  Downloading PyDispatcher-2.0.5.tar.gz
Collecting pyOpenSSL (from scrapy)
  Downloading pyOpenSSL-17.5.0-py2.py3-none-any.whl (53kB)
    100% |████████████████████████████████| 61kB 32kB/s
Collecting Twisted>=13.1.0 (from scrapy)
  Downloading Twisted-17.9.0.tar.bz2 (3.0MB)
    100% |████████████████████████████████| 3.0MB 21kB/s
Requirement already satisfied: six>=1.5.2 in /usr/local/lib/python2.7/site-packages (from scrapy)
Collecting pyasn1-modules (from service-identity->scrapy)
  Downloading pyasn1_modules-0.2.1-py2.py3-none-any.whl (60kB)
    100% |████████████████████████████████| 61kB 37kB/s
Collecting attrs (from service-identity->scrapy)
  Downloading attrs-17.3.0-py2.py3-none-any.whl
Collecting pyasn1 (from service-identity->scrapy)
  Downloading pyasn1-0.4.2-py2.py3-none-any.whl (71kB)
    100% |████████████████████████████████| 71kB 38kB/s
Collecting cryptography>=2.1.4 (from pyOpenSSL->scrapy)
  Downloading cryptography-2.1.4-cp27-cp27m-macosx_10_6_intel.whl (1.5MB)
    100% |████████████████████████████████| 1.5MB 26kB/s
Collecting zope.interface>=3.6.0 (from Twisted>=13.1.0->scrapy)
  Downloading zope.interface-4.4.3.tar.gz (147kB)
    100% |████████████████████████████████| 153kB 67kB/s
Collecting constantly>=15.1 (from Twisted>=13.1.0->scrapy)
  Downloading constantly-15.1.0-py2.py3-none-any.whl
Collecting incremental>=16.10.1 (from Twisted>=13.1.0->scrapy)
  Downloading incremental-17.5.0-py2.py3-none-any.whl
Collecting Automat>=0.3.0 (from Twisted>=13.1.0->scrapy)
  Downloading Automat-0.6.0-py2.py3-none-any.whl
Collecting hyperlink>=17.1.1 (from Twisted>=13.1.0->scrapy)
  Downloading hyperlink-17.3.1-py2.py3-none-any.whl (73kB)
    100% |████████████████████████████████| 81kB 67kB/s
Requirement already satisfied: idna>=2.1 in /usr/local/lib/python2.7/site-packages (from cryptography>=2.1.4->pyOpenSSL->scrapy)
Collecting cffi>=1.7; platform_python_implementation != "PyPy" (from cryptography>=2.1.4->pyOpenSSL->scrapy)
  Downloading cffi-1.11.2-cp27-cp27m-macosx_10_6_intel.whl (238kB)
    100% |████████████████████████████████| 245kB 43kB/s
Requirement already satisfied: enum34; python_version < "3" in /usr/local/lib/python2.7/site-packages (from cryptography>=2.1.4->pyOpenSSL->scrapy)
Collecting asn1crypto>=0.21.0 (from cryptography>=2.1.4->pyOpenSSL->scrapy)
  Downloading asn1crypto-0.24.0-py2.py3-none-any.whl (101kB)
    100% |████████████████████████████████| 102kB 42kB/s
Collecting ipaddress; python_version < "3" (from cryptography>=2.1.4->pyOpenSSL->scrapy)
  Downloading ipaddress-1.0.19.tar.gz
Requirement already satisfied: setuptools in /usr/local/lib/python2.7/site-packages (from zope.interface>=3.6.0->Twisted>=13.1.0->scrapy)
Collecting pycparser (from cffi>=1.7; platform_python_implementation != "PyPy"->cryptography>=2.1.4->pyOpenSSL->scrapy)
  Downloading pycparser-2.18.tar.gz (245kB)
    100% |████████████████████████████████| 256kB 45kB/s
Building wheels for collected packages: PyDispatcher, Twisted, zope.interface, ipaddress, pycparser
  Running setup.py bdist_wheel for PyDispatcher ... done
  Stored in directory: /Users/crifan/Library/Caches/pip/wheels/86/02/a1/5857c77600a28813aaf0f66d4e4568f50c9f133277a4122411
  Running setup.py bdist_wheel for Twisted ... done
  Stored in directory: /Users/crifan/Library/Caches/pip/wheels/91/c7/95/0bb4d45bc4ed91375013e9b5f211ac3ebf4138d8858f84abbc
  Running setup.py bdist_wheel for zope.interface ... done
  Stored in directory: /Users/crifan/Library/Caches/pip/wheels/8b/39/98/0fcb72adfb12b2547273b1164d952f093f267e0324d58b6955
  Running setup.py bdist_wheel for ipaddress ... done
  Stored in directory: /Users/crifan/Library/Caches/pip/wheels/d7/6b/69/666188e8101897abb2e115d408d139a372bdf6bfa7abb5aef5
  Running setup.py bdist_wheel for pycparser ... done
  Stored in directory: /Users/crifan/Library/Caches/pip/wheels/95/14/9a/5e7b9024459d2a6600aaa64e0ba485325aff7a9ac7489db1b6
Successfully built PyDispatcher Twisted zope.interface ipaddress pycparser
Installing collected packages: cssselect, lxml, w3lib, parsel, pyasn1, pyasn1-modules, attrs, pycparser, cffi, asn1crypto, ipaddress, cryptography, pyOpenSSL, service-identity, queuelib, PyDispatcher, zope.interface, constantly, incremental, Automat, hyperlink, Twisted, scrapy
Successfully installed Automat-0.6.0 PyDispatcher-2.0.5 Twisted-17.9.0 asn1crypto-0.24.0 attrs-17.3.0 cffi-1.11.2 constantly-15.1.0 cryptography-2.1.4 cssselect-1.0.1 hyperlink-17.3.1 incremental-17.5.0 ipaddress-1.0.19 lxml-4.1.1 parsel-1.2.0 pyOpenSSL-17.5.0 pyasn1-0.4.2 pyasn1-modules-0.2.1 pycparser-2.18 queuelib-1.4.2 scrapy-1.4.0 service-identity-17.0.0 w3lib-1.18.0 zope.interface-4.4.3
```
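The install above was done on Python 2.7. On a current Python 3 setup, you can quickly confirm that Scrapy and the main dependencies from that log are present by reading their installed versions through the standard library's `importlib.metadata` (Python 3.8+). This is a minimal sketch, and the package list is just a selection taken from the pip log. Scrapy's own `scrapy version -v` reports similar information from the command line.

```python
from importlib.metadata import PackageNotFoundError, version

# A selection of distribution names taken from the pip log above.
PACKAGES = ["Scrapy", "Twisted", "lxml", "parsel", "w3lib", "pyOpenSSL", "cryptography"]


def installed_versions(names):
    """Map each distribution name to its installed version string, or None if missing."""
    result = {}
    for name in names:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


for name, ver in installed_versions(PACKAGES).items():
    print("%-12s %s" % (name, ver or "NOT INSTALLED"))
```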

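I read:

It looks like the later steps will involve:

- using the interactive shell to debug the extraction syntax against the fetched pages
- using the media pipeline to download the audio and video files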
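For the second point, Scrapy ships a media pipeline for arbitrary files, `FilesPipeline`, which is enabled from `settings.py`. Below is a minimal settings fragment under my own assumptions. The `downloads` directory name is hypothetical, and whether the cbeebies audio is reachable as plain file URLs is not yet known at this stage.

```python
# settings.py (fragment): enable Scrapy's built-in FilesPipeline.
ITEM_PIPELINES = {
    "scrapy.pipelines.files.FilesPipeline": 1,
}

# Hypothetical local directory where downloaded audio/video files are stored.
FILES_STORE = "downloads"

# With these settings, FilesPipeline downloads the URLs listed in each
# scraped item's "file_urls" field (its default field name).
```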

First, let's see which commands are available:

```
➜ scrapy scrapy --help
Scrapy 1.4.0 - no active project

Usage:
  scrapy <command> [options] [args]

Available commands:
  bench         Run quick benchmark test
  fetch         Fetch a URL using the Scrapy downloader
  genspider     Generate new spider using pre-defined templates
  runspider     Run a self-contained spider (without creating a project)
  settings      Get settings values
  shell         Interactive scraping console
  startproject  Create new project
  version       Print Scrapy version
  view          Open URL in browser, as seen by Scrapy

  [ more ]      More commands available when run from project directory

Use "scrapy <command> -h" to see more info about a command
```

Next, look at the options of some of the subcommands:

```
➜ scrapy scrapy startproject -h
Usage
=====
  scrapy startproject <project_name> [project_dir]

Create new project

Options
=======
--help, -h              show this help message and exit

Global Options
--------------
--logfile=FILE          log file. if omitted stderr will be used
--loglevel=LEVEL, -L LEVEL
                        log level (default: DEBUG)
--nolog                 disable logging completely
--profile=FILE          write python cProfile stats to FILE
--pidfile=FILE          write process ID to FILE
--set=NAME=VALUE, -s NAME=VALUE
                        set/override setting (may be repeated)
--pdb                   enable pdb on failure
```

And the same for `bench`, `fetch` and `shell`:

```
➜ scrapy scrapy bench -h
Usage
=====
  scrapy bench

Run quick benchmark test

Options
=======
--help, -h              show this help message and exit

Global Options
--------------
--logfile=FILE          log file. if omitted stderr will be used
--loglevel=LEVEL, -L LEVEL
                        log level (default: INFO)
--nolog                 disable logging completely
--profile=FILE          write python cProfile stats to FILE
--pidfile=FILE          write process ID to FILE
--set=NAME=VALUE, -s NAME=VALUE
                        set/override setting (may be repeated)
--pdb                   enable pdb on failure

➜ scrapy scrapy fetch -h
Usage
=====
  scrapy fetch [options] <url>

Fetch a URL using the Scrapy downloader and print its content to stdout. You
may want to use --nolog to disable logging

Options
=======
--help, -h              show this help message and exit
--spider=SPIDER         use this spider
--headers               print response HTTP headers instead of body
--no-redirect           do not handle HTTP 3xx status codes and print response
                        as-is

Global Options
--------------
--logfile=FILE          log file. if omitted stderr will be used
--loglevel=LEVEL, -L LEVEL
                        log level (default: DEBUG)
--nolog                 disable logging completely
--profile=FILE          write python cProfile stats to FILE
--pidfile=FILE          write process ID to FILE
--set=NAME=VALUE, -s NAME=VALUE
                        set/override setting (may be repeated)
--pdb                   enable pdb on failure

➜ scrapy scrapy shell -h
Usage
=====
  scrapy shell [url|file]

Interactive console for scraping the given url

Options
=======
--help, -h              show this help message and exit
-c CODE                 evaluate the code in the shell, print the result and
                        exit
--spider=SPIDER         use this spider
--no-redirect           do not handle HTTP 3xx status codes and print response
                        as-is

Global Options
--------------
--logfile=FILE          log file. if omitted stderr will be used
--loglevel=LEVEL, -L LEVEL
                        log level (default: DEBUG)
--nolog                 disable logging completely
--profile=FILE          write python cProfile stats to FILE
--pidfile=FILE          write process ID to FILE
--set=NAME=VALUE, -s NAME=VALUE
                        set/override setting (may be repeated)
--pdb                   enable pdb on failure
```

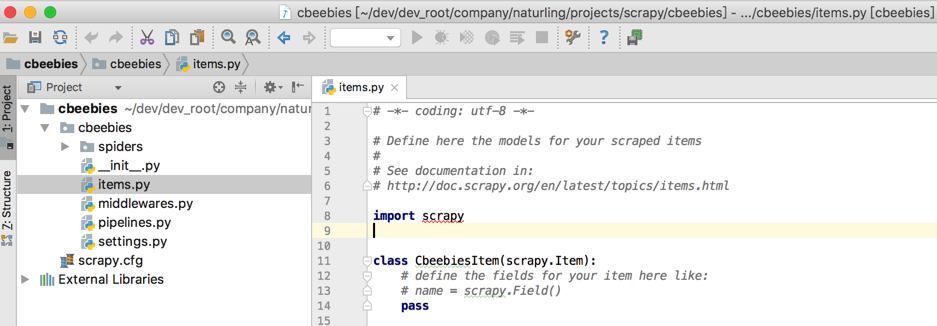

Then create the project:

```
➜ scrapy scrapy startproject cbeebies
New Scrapy project 'cbeebies', using template directory '/usr/local/lib/python2.7/site-packages/scrapy/templates/project', created in:
    /Users/crifan/dev/dev_root/company/naturling/projects/scrapy/cbeebies

You can start your first spider with:
    cd cbeebies
    scrapy genspider example example.com
➜ scrapy pwd
/Users/crifan/dev/dev_root/company/naturling/projects/scrapy
➜ scrapy ll
total 0
drwxr-xr-x 4 crifan staff 128B 12 26 22:50 cbeebies
➜ scrapy cd cbeebies
➜ cbeebies ll
total 8
drwxr-xr-x 8 crifan staff 256B 12 26 22:50 cbeebies
-rw-r--r-- 1 crifan staff 260B 12 26 22:50 scrapy.cfg
➜ cbeebies cd cbeebies
➜ cbeebies ll
total 32
-rw-r--r-- 1 crifan staff 0B 12 26 20:41 __init__.py
-rw-r--r-- 1 crifan staff 287B 12 26 22:50 items.py
-rw-r--r-- 1 crifan staff 1.9K 12 26 22:50 middlewares.py
-rw-r--r-- 1 crifan staff 288B 12 26 22:50 pipelines.py
-rw-r--r-- 1 crifan staff 3.1K 12 26 22:50 settings.py
drwxr-xr-x 3 crifan staff 96B 12 26 20:49 spiders
```

I then took a look at:

Then, from the project root directory, check which additional commands are available:

```
➜ cbeebies pwd
/Users/crifan/dev/dev_root/company/naturling/projects/scrapy/cbeebies
➜ cbeebies ll
total 8
drwxr-xr-x 10 crifan staff 320B 12 26 23:07 cbeebies
-rw-r--r-- 1 crifan staff 260B 12 26 22:50 scrapy.cfg
➜ cbeebies scrapy --help
Scrapy 1.4.0 - project: cbeebies

Usage:
  scrapy <command> [options] [args]

Available commands:
  bench         Run quick benchmark test
  check         Check spider contracts
  crawl         Run a spider
  edit          Edit spider
  fetch         Fetch a URL using the Scrapy downloader
  genspider     Generate new spider using pre-defined templates
  list          List available spiders
  parse         Parse URL (using its spider) and print the results
  runspider     Run a self-contained spider (without creating a project)
  settings      Get settings values
  shell         Interactive scraping console
  startproject  Create new project
  version       Print Scrapy version
  view          Open URL in browser, as seen by Scrapy

Use "scrapy <command> -h" to see more info about a command
```

Running `scrapy crawl` with the lowercase name `cbeebies` fails:

```
➜ cbeebies scrapy crawl cbeebies
2017-12-26 23:08:39 [scrapy.utils.log] INFO: Scrapy 1.4.0 started (bot: cbeebies)
2017-12-26 23:08:39 [scrapy.utils.log] INFO: Overridden settings: {'NEWSPIDER_MODULE': 'cbeebies.spiders', 'SPIDER_MODULES': ['cbeebies.spiders'], 'ROBOTSTXT_OBEY': True, 'BOT_NAME': 'cbeebies'}
Traceback (most recent call last):
  File "/usr/local/bin/scrapy", line 11, in <module>
    sys.exit(execute())
  File "/usr/local/lib/python2.7/site-packages/scrapy/cmdline.py", line 149, in execute
    _run_print_help(parser, _run_command, cmd, args, opts)
  File "/usr/local/lib/python2.7/site-packages/scrapy/cmdline.py", line 89, in _run_print_help
    func(*a, **kw)
  File "/usr/local/lib/python2.7/site-packages/scrapy/cmdline.py", line 156, in _run_command
    cmd.run(args, opts)
  File "/usr/local/lib/python2.7/site-packages/scrapy/commands/crawl.py", line 57, in run
    self.crawler_process.crawl(spname, **opts.spargs)
  File "/usr/local/lib/python2.7/site-packages/scrapy/crawler.py", line 167, in crawl
    crawler = self.create_crawler(crawler_or_spidercls)
  File "/usr/local/lib/python2.7/site-packages/scrapy/crawler.py", line 195, in create_crawler
    return self._create_crawler(crawler_or_spidercls)
  File "/usr/local/lib/python2.7/site-packages/scrapy/crawler.py", line 199, in _create_crawler
    spidercls = self.spider_loader.load(spidercls)
  File "/usr/local/lib/python2.7/site-packages/scrapy/spiderloader.py", line 71, in load
    raise KeyError("Spider not found: {}".format(spider_name))
KeyError: 'Spider not found: cbeebies'
```

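Using the capitalized `Cbeebies` works. `scrapy crawl` looks spiders up by their `name` attribute, not by the class name, and the match is case-sensitive: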

```
➜ cbeebies scrapy crawl Cbeebies
2017-12-26 23:09:00 [scrapy.utils.log] INFO: Scrapy 1.4.0 started (bot: cbeebies)
2017-12-26 23:09:00 [scrapy.utils.log] INFO: Overridden settings: {'NEWSPIDER_MODULE': 'cbeebies.spiders', 'SPIDER_MODULES': ['cbeebies.spiders'], 'ROBOTSTXT_OBEY': True, 'BOT_NAME': 'cbeebies'}
2017-12-26 23:09:00 [scrapy.middleware] INFO: Enabled extensions:
['scrapy.extensions.memusage.MemoryUsage',
 'scrapy.extensions.logstats.LogStats',
 'scrapy.extensions.telnet.TelnetConsole',
 'scrapy.extensions.corestats.CoreStats']
2017-12-26 23:09:00 [scrapy.middleware] INFO: Enabled downloader middlewares:
['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',
 'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',
 'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',
 'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',
 'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',
 'scrapy.downloadermiddlewares.retry.RetryMiddleware',
 'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',
 'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',
 'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',
 'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',
 'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',
 'scrapy.downloadermiddlewares.stats.DownloaderStats']
2017-12-26 23:09:00 [scrapy.middleware] INFO: Enabled spider middlewares:
['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',
 'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',
 'scrapy.spidermiddlewares.referer.RefererMiddleware',
 'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',
 'scrapy.spidermiddlewares.depth.DepthMiddleware']
2017-12-26 23:09:00 [scrapy.middleware] INFO: Enabled item pipelines:
[]
2017-12-26 23:09:00 [scrapy.core.engine] INFO: Spider opened
2017-12-26 23:09:00 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)
2017-12-26 23:09:00 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023
2017-12-26 23:09:02 [scrapy.downloadermiddlewares.redirect] DEBUG: Redirecting (301) to <GET http://global.cbeebies.com/> from <GET http://us.cbeebies.com/robots.txt>
2017-12-26 23:09:03 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://global.cbeebies.com/> (referer: None)
2017-12-26 23:09:04 [scrapy.downloadermiddlewares.redirect] DEBUG: Redirecting (301) to <GET http://global.cbeebies.com/> from <GET http://us.cbeebies.com/watch-and-sing/>
2017-12-26 23:09:04 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://global.cbeebies.com/robots.txt> (referer: None)
2017-12-26 23:09:04 [scrapy.downloadermiddlewares.redirect] DEBUG: Redirecting (301) to <GET http://global.cbeebies.com/> from <GET http://us.cbeebies.com/shows/>
2017-12-26 23:09:04 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://global.cbeebies.com/> (referer: None)
response.url=http://global.cbeebies.com/
2017-12-26 23:09:05 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://global.cbeebies.com/> (referer: None)
response.url=http://global.cbeebies.com/
2017-12-26 23:09:05 [scrapy.core.engine] INFO: Closing spider (finished)
2017-12-26 23:09:05 [scrapy.statscollectors] INFO: Dumping Scrapy stats:
{'downloader/request_bytes': 1548,
 'downloader/request_count': 7,
 'downloader/request_method_count/GET': 7,
 'downloader/response_bytes': 12888,
 'downloader/response_count': 7,
 'downloader/response_status_count/200': 4,
 'downloader/response_status_count/301': 3,
 'finish_reason': 'finished',
 'finish_time': datetime.datetime(2017, 12, 26, 15, 9, 5, 194303),
 'log_count/DEBUG': 8,
 'log_count/INFO': 7,
 'memusage/max': 50208768,
 'memusage/startup': 50204672,
 'response_received_count': 4,
 'scheduler/dequeued': 4,
 'scheduler/dequeued/memory': 4,
 'scheduler/enqueued': 4,
 'scheduler/enqueued/memory': 4,
 'start_time': datetime.datetime(2017, 12, 26, 15, 9, 0, 792051)}
2017-12-26 23:09:05 [scrapy.core.engine] INFO: Spider closed (finished)
➜ cbeebies
```

After that, the spider could also write out the corresponding HTML file.
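How the HTML file was written isn't shown here. One minimal way is a small stdlib helper called from the spider's `parse()` as `save_html(response.url, response.body)`, assuming you just want the raw `response.body` bytes on disk. The file-naming scheme (`host_path.html`) and both helper names are my own choices for this sketch.

```python
import os
from urllib.parse import urlparse


def html_filename_for(url):
    """Derive a local file name from a URL: host, then the path with '/' turned into '_'."""
    parsed = urlparse(url)
    path = parsed.path.strip("/").replace("/", "_")
    base = parsed.netloc if not path else "%s_%s" % (parsed.netloc, path)
    return base + ".html"


def save_html(url, body, out_dir="."):
    """Write the raw response bytes into out_dir and return the file path."""
    path = os.path.join(out_dir, html_filename_for(url))
    with open(path, "wb") as f:
        f.write(body)
    return path
```

Writing bytes (`"wb"`) rather than text keeps whatever encoding the server sent, so no decoding decision is needed at this stage.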

Next I went on to:

【Solved】Setting breakpoints to debug a Scrapy project live in PyCharm on Mac

At this point I could already get the HTML content of each crawled page from `response.body`.

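The next questions were how to:

- parse the page and extract the URLs that need further processing
- hand those URLs back to Scrapy so that it goes on to crawl them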

【Log】Trying Scrapy shell to extract sub-URLs from cbeebies.com pages

Please credit when reposting: 在路上 » 【Log】Crawling cbeebies.com with Python's Scrapy